javascript templates with mustache and icanhaz.js

Templating is an important part of the view side of model/view/controller architecture. In fact, most web apps are really model-template applications.

In the old days we used to print out html that was generated by hand. You started with something like:

print "Content-type: text/html\n\n";

And it got worse from there. You were sunk if you forgot that second newline. Templating was a problem that had to be solved early on, and now we have files with snippets of html and tokens to be replaced. Typically, they have a syntax like:

Hello,

If you pass the template a user object with a name attribute, the token is replaced with the correct information and rendered into useful html without much fuss.

As we move more of our application logic into the client side to be executed in the browser, a javascript templating engine becomes necessary. I have used jstemplate with success, but it shoehorns the template structure into html tag attributes, which makes it difficult to read and understand. I much prefer curly brace tokens. People seem to like the jquery template plugin, but it doesn't have a great method for packaging templates and it looks like you have to store them as js strings. That isn't bad, but for multiline templates you have to escape newlines, which leads to ugly code.

Mustache is an awesome templating engine with support in many languages, including javascript. Someone went to the trouble of creating an excellent method of loading templates and wrapped mustache.js into a package with the slightly silly name icanhaz.js. Icanhaz makes it almost painless to retrieve and render template assets. The icanhaz.js website has an awesome introduction and it is simple to use.

Lately I have been finding that the best way to wrap my brain around a new topic is to write some unit tests. Ideally I would like to use mustache templates with backbone.js, so I am going to build on the tests from my last entry by incorporating icanhaz.js. First let's grab the code from github and sock it away into our project. I'm not spending too much time on the layout for this test, so I'm just going to drop icanhaz.js into the src folder.

git clone git://github.com/andyet/ICanHaz.js

cd ICanHaz.js/

cp ICanHaz.js ~/project/src/

Now we add a reference to this file to SpecRunner.html, so that the library loading component looks something like:

Note that the order of loading these scripts is important because these libraries depend on each other. Icanhaz requires jquery, so we're going to load it as well. Honestly, jquery is nice to have for any project. Loading it from google is nice and fast. If you fear the cloud, you could load it locally as well.

For simplicity's sake I am going to start with loading a template from a string and giving it a javascript object to render. This method isn't covered quite as well on the icanhaz.js website, but it's a useful starting point for understanding the basics of mustache. Let's add a test:

describe("icanhaz templates", function() {

it("should be able to load template", function() {

ich.addTemplate('model', '<div>{{name}}</div>');

var snippet = ich.model({name: 'Alf Prufrock'});

expect(snippet.html()).toEqual('Alf Prufrock');

expect(snippet.text()).toEqual("Alf Prufrock");

});

});

This code uses the ich method addTemplate to build a template named 'model' from the given string. The template name becomes the name of a method on the ich object, which takes an object or object literal containing the values to be interpolated and renders them as html. The return value is already a jquery object, so we can call html() and text() on it directly. Notice that the html of the rendered template doesn't include the surrounding div that we passed to icanhaz, because html() returns only the contents of that outer element.
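To make the mechanics a little more concrete, here is a minimal sketch of the pattern described above: a template string is stored under a name, and that name becomes a method that renders it. This is not the icanhaz source. The `tiny` object and its `render` helper are made-up names for illustration, the sketch only handles simple `{{token}}` substitution, it does no html escaping, and it returns a plain string rather than a jquery object.

```javascript
// Hypothetical miniature of the addTemplate pattern; not the icanhaz source.
var tiny = {
  templates: {},

  // Replace each {{key}} token with the matching property of data.
  // Keys that are missing from data render as an empty string.
  render: function (template, data) {
    return template.replace(/\{\{\s*(\w+)\s*\}\}/g, function (match, key) {
      var value = data[key];
      return value === undefined || value === null ? '' : String(value);
    });
  },

  // Store the template and expose it as tiny[name](data).
  addTemplate: function (name, template) {
    tiny.templates[name] = template;
    tiny[name] = function (data) {
      return tiny.render(template, data || {});
    };
  }
};

tiny.addTemplate('model', '<div>{{name}}</div>');
var html = tiny.model({ name: 'Alf Prufrock' });
```

Because the sketch returns a string, the surrounding div is still present in `html`. With icanhaz the same markup gets wrapped in a jquery object, which is why html() in the test reports only the inner text.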

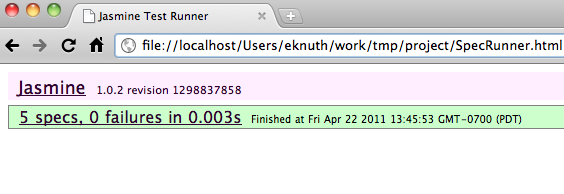

This template can be used any number of times to render this little bit of html. It could also be an entire web page or even bits and pieces called partials. When we load SpecRunner.html, all tests pass, so we know that the code is doing what we think it should be doing.
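As a rough illustration of the reuse and partials mentioned above, the sketch below renders one template for several objects and pulls in a partial using the mustache `{{>name}}` notation. The `renderTemplate` function is a hypothetical stand-in, not mustache.js or icanhaz: it expands partials only one level deep and does no html escaping.

```javascript
// Hypothetical helper; a simplified stand-in for a mustache-style renderer.
function renderTemplate(template, data, partials) {
  partials = partials || {};
  // First expand {{>name}} references with the partial's source text.
  var expanded = template.replace(/\{\{>\s*(\w+)\s*\}\}/g, function (match, name) {
    return partials[name] || '';
  });
  // Then substitute {{key}} tokens from the data object.
  return expanded.replace(/\{\{\s*(\w+)\s*\}\}/g, function (match, key) {
    var value = data[key];
    return value === undefined || value === null ? '' : String(value);
  });
}

var partials = { greeting: 'Hello, {{name}}' };
var page = '<p>{{>greeting}}</p>';

// The same template is rendered once per object.
var people = [{ name: 'Alf Prufrock' }, { name: 'Ada Lovelace' }];
var rendered = people.map(function (person) {
  return renderTemplate(page, person, partials);
});
```

Each entry in `rendered` is the same paragraph markup filled in with a different name, which is the whole point of keeping the template separate from the data.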